3D Scene Understanding from Monocular Cameras

Bernt Schiele & Christian Wojek

Scene understanding – a longstanding goal in computer vision

Inspired by the human visual system, visual scene understanding has been advocated as the “holy grail” of machine vision since the field's beginning. In the early days, this problem was addressed in a bottom-up fashion, starting from low-level features such as edges and their orientation as the key ingredients for deriving a complete scene description. Unfortunately, the reliable extraction of such low-level features proved very difficult due to their limited expressive power and their inherent ambiguities. As a result, and despite enormous efforts, scene understanding remained an elusive goal even for relatively constrained and simple scenes. Disappointed by these early attempts, the research community turned to easier sub-problems and has achieved remarkable results in areas such as camera geometry, image segmentation, object detection, and tracking. As performance on these sub-tasks reaches remarkable levels, we believe that computer vision should reinvestigate the problem of automatically understanding 3D scenes from still images and video sequences.

Robotics and automotive applications

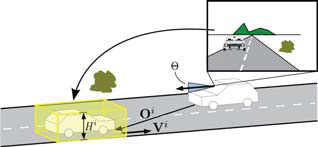

Robotics applications, such as mobile service robots, and automotive safety applications, such as pedestrian protection systems, are clearly of major scientific and commercial interest. Therefore, we use the recognition of pedestrians and cars from a moving camera mounted on a vehicle or robot as a test case and running example for our work. We can leverage domain knowledge for both application areas. The camera, for instance, can be calibrated relative to its surroundings, so its height above the ground and its orientation are constrained and approximately known. Moreover, it can be assumed that the ground is flat in a local neighborhood and that all objects are supported by it. Additionally, we include Gaussian priors on the heights of pedestrians and cars. These assumptions impose additional constraints that eliminate ambiguities and allow us to solve the scene understanding problem for such application domains more easily. See figure 1 for a sample scene.

Figure 1: Our system aims to infer a 3D scene based on a combination of prior domain knowledge with videos of a monocular camera.
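To illustrate how the domain knowledge described above constrains the problem, the following minimal sketch recovers the distance and metric height of an object from a single 2D bounding box and scores that height against a Gaussian prior. It is not the system's actual implementation. It assumes an idealized pinhole camera whose optical axis is parallel to a flat ground plane, and all names and numbers (focal length, camera height, horizon row, the pedestrian height mean and standard deviation) are hypothetical values chosen for illustration.

```python
import math


def ground_distance(v_bottom, horizon_row, focal_px, cam_height_m):
    """Distance (m) to a ground point imaged at image row v_bottom.

    Assumes a pinhole camera at height cam_height_m above a flat ground
    plane, with the optical axis parallel to the ground (illustrative only).
    """
    dv = v_bottom - horizon_row
    if dv <= 0:
        raise ValueError("foot point must lie below the horizon row")
    return focal_px * cam_height_m / dv


def object_height(v_top, v_bottom, horizon_row, focal_px, cam_height_m):
    """Metric height (m) of an object standing on the ground plane."""
    z = ground_distance(v_bottom, horizon_row, focal_px, cam_height_m)
    return z * (v_bottom - v_top) / focal_px


def gaussian_log_prior(x, mean, std):
    """Log-density of a 1D Gaussian, used here as a height prior."""
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std * math.sqrt(2.0 * math.pi))


# Hypothetical camera and detection (rows are measured downward from the top).
FOCAL_PX, CAM_HEIGHT_M, HORIZON_ROW = 800.0, 1.5, 240
distance = ground_distance(360, HORIZON_ROW, FOCAL_PX, CAM_HEIGHT_M)
height = object_height(224, 360, HORIZON_ROW, FOCAL_PX, CAM_HEIGHT_M)

# Assumed pedestrian height prior: mean 1.7 m, standard deviation 0.1 m.
score = gaussian_log_prior(height, mean=1.7, std=0.1)
print(f"distance={distance:.1f} m, height={height:.2f} m, log-prior={score:.2f}")
```

A detection whose implied height is implausible for its class, for example a "pedestrian" that would have to be 3 m tall, receives a low prior score, which is one way such constraints can help reject ambiguous 2D hypotheses.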

Integrated 3D scene model

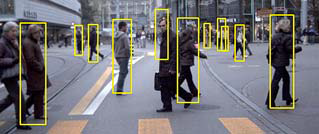

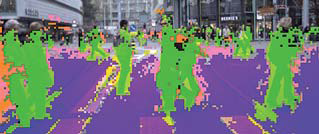

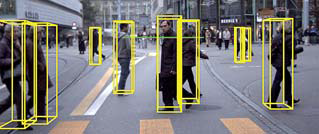

The system that we have developed combines the above prior domain knowledge with powerful state-of-the-art object class detectors, semantic scene labeling, and the notion of tracklets. Object class detectors determine 2D object locations in the image, and semantic scene labeling infers a semantic class such as road, sky, or object for each pixel. Tracklets exploit geometric and dynamic consistency over a longer period of time and accumulate image information over several frames to achieve more robust reasoning (see figure 2 for sample detections, segmentation, and system results).

Figure 2: 2D detections, semantic scene labeling and results of our 3D scene estimation

By employing 3D reasoning, our model is able to represent complex interactions like inter-object occlusion, physical exclusion between objects, and geometric context. The results are encouraging and show that the combination of the individual components not only allows us to infer a 3D world representation but also improves the baseline given by the object class detectors. Throughout this research project we have used monocular cameras and are therefore not able to directly extract depth from the image disparity. Nonetheless, our approach is able to outperform similar systems that use a stereo camera setup.
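As a simplified illustration of why 3D reasoning helps with inter-object occlusion, the sketch below orders hypothetical objects by their estimated depth and computes what fraction of each 2D bounding box remains visible behind closer objects. This is an assumption-laden toy, not the model used in the system: boxes are axis-aligned integer pixel rectangles `(x0, y0, x1, y1)` with exclusive upper bounds, and the function and object names are invented for this example.

```python
def visible_fraction(target, occluders):
    """Fraction of the target box's pixels not covered by any occluder box."""
    x0, y0, x1, y1 = target
    total = (x1 - x0) * (y1 - y0)
    if total <= 0:
        return 0.0
    visible = 0
    for y in range(y0, y1):
        for x in range(x0, x1):
            covered = any(
                ox0 <= x < ox1 and oy0 <= y < oy1
                for ox0, oy0, ox1, oy1 in occluders
            )
            if not covered:
                visible += 1
    return visible / total


def occlusion_by_depth(objects):
    """Map each object name to its visible fraction.

    objects: list of (name, depth_m, box) tuples. Objects with a smaller
    depth are treated as occluders of everything farther away.
    """
    result = {}
    closer_boxes = []
    for name, _depth, box in sorted(objects, key=lambda o: o[1]):
        result[name] = visible_fraction(box, closer_boxes)
        closer_boxes.append(box)
    return result


# Hypothetical scene: a car at 8 m partially hides a pedestrian at 15 m.
scene = [
    ("pedestrian", 15.0, (5, 0, 15, 10)),
    ("car", 8.0, (0, 0, 10, 10)),
]
print(occlusion_by_depth(scene))
```

With a visibility estimate of this kind, a weak detector response for a half-hidden pedestrian can be interpreted as expected rather than as evidence against the object, which is the sort of interaction that a purely 2D detector cannot express.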

Bernt Schiele

DEPT. 2 Computer Vision and Multimodal Computing

Phone +49 681 9325-2000

Email schiele@mpi-inf.mpg.de

Christian Wojek

DEPT. 2 Computer Vision and Multimodal Computing

Phone +49 681 9325-2000

Email cwojek@mpi-inf.mpg.de

Internet www.d2.mpi-inf.mpg.de
