Leveraging 3D Body Model for Training Data Generation

People detection is an important task for a wide range of applications in computer vision. State-of-the-art methods learn appearance-based models, which require the tedious collection and annotation of large data corpora. Moreover, obtaining data sets that represent all relevant variations with sufficient accuracy for the application domain at hand is often non-trivial. Therefore, in this project we investigate how 3D shape models from computer graphics can be leveraged to ease the generation of training data.

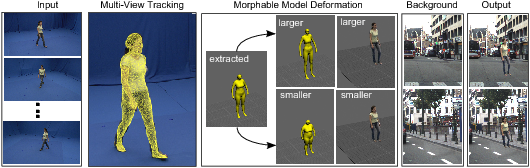

MovieReshape for data generation [1]

Here we employ a rendering-based reshaping method to generate a potentially unlimited number of photorealistic synthetic training samples from only a few persons and views.

To do so, we ask subjects to perform movements in front of a uniformly colored background in our motion capture studio and use the extracted silhouettes to automatically fit the morphable 3D body model to the input sequences. We then randomly sample from the space of possible 3D shape variations defined by the morphable body model. These shape parameters drive a 2D deformation of the subject's image. In the last step, an arbitrary background is selected and composited with the image of the deformed subject.
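The sampling and compositing steps can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the method from [1]. It assumes a PCA-style morphable model (a mean shape plus a basis of shape components with per-component standard deviations). It draws standard-normal coefficients and clips them to ±`max_std` standard deviations so that samples stay in the plausible region of the shape space. It then alpha-blends the subject onto a new background, using the silhouette as the mask. The step that warps the 2D image according to the 3D shape change is omitted, and all function names are hypothetical.

```python
import numpy as np


def sample_shape_params(num_components, num_samples, max_std=3.0, rng=None):
    """Draw coefficients for a hypothetical PCA-style morphable body model.

    Each coefficient is drawn from N(0, 1), i.e. in units of its component's
    standard deviation, and clipped to [-max_std, max_std] (an assumption)
    so that samples stay within the plausible region of the shape space.
    """
    rng = np.random.default_rng() if rng is None else rng
    coeffs = rng.standard_normal((num_samples, num_components))
    return np.clip(coeffs, -max_std, max_std)


def shape_from_params(mean_shape, basis, stddevs, coeffs):
    """Reconstruct flattened vertex positions: mean + sum_k c_k * std_k * basis_k.

    mean_shape: (3V,), basis: (K, 3V), stddevs: (K,), coeffs: (K,)
    """
    return mean_shape + (coeffs * stddevs) @ basis


def composite(foreground, alpha, background):
    """Alpha-blend the (deformed) subject over a new background.

    foreground, background: (H, W, 3) float images in [0, 1]
    alpha: (H, W) silhouette mask in [0, 1]
    """
    a = alpha[..., None]  # broadcast the mask over the color channels
    return a * foreground + (1.0 - a) * background


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    K, V = 10, 100  # hypothetical: 10 shape components, 100 vertices
    mean_shape = np.zeros(V * 3)
    basis = rng.standard_normal((K, V * 3))
    stddevs = np.linspace(1.0, 0.1, K)
    params = sample_shape_params(K, num_samples=5, rng=rng)
    shapes = np.stack([shape_from_params(mean_shape, basis, stddevs, p) for p in params])
    print(shapes.shape)  # five sampled body shapes, each with 3V coordinates
```

In a full pipeline, `foreground` would be the warped video frame and `alpha` the correspondingly warped silhouette. Clipping is only one simple way to keep samples inside the shape space; rejection sampling would be an equally valid choice.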

Our approach to data generation is fundamentally different from existing methods. The employed parametric model captures the space of possible human shapes and creates only examples that lie within this space, while deforming real images with the parametric model allows us to generate training images with realistic appearance. Our people detection experiments on a challenging multi-view dataset indicate that data from as few as eleven persons suffices to achieve good performance.

Generated synthetic data

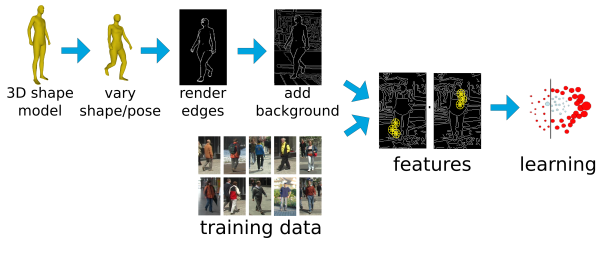

Combining 3D shape and appearance [2]

In this work we do not aim for visually appealing, photorealistically rendered data. Instead, we focus on complementary information that is particularly important for people detection, namely 3D human shape. The main intuition is that image-based training data should be enriched with data that carries complementary shape information, and that this data should be sampled from the underlying distribution of 3D human shapes.

We sample the 3D human shape space to produce several thousand synthetic instances that cover a wide range of human poses and shapes. Next, we render non-photorealistic 2D edge images of these samples from a large range of viewpoints and compute a low-level edge-based feature representation. Finally, we jointly train a people detector on synthetic edge-based features and on features extracted from real images. Experimental results show that combining synthetic and real training data outperforms training on real data alone.
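A toy version of the feature extraction and joint training steps is sketched below in Python with NumPy. It is an illustration under assumptions, not the pipeline from [2]. The feature is a simplified, gradient-magnitude-weighted histogram of unsigned edge orientations, used here as a stand-in for HOG-like descriptors. The detector is a plain logistic-regression classifier trained by batch gradient descent. Rendering edge images from multiple viewpoints is not shown. The sketch only illustrates that synthetic and real feature vectors share one feature space and can be stacked into a single training set. All function names are hypothetical.

```python
import numpy as np


def edge_orientation_histogram(image, num_bins=9):
    """Simplified low-level edge feature for a grayscale image.

    Builds a histogram of unsigned gradient orientations in [0, pi), weighted
    by gradient magnitude and L2-normalized. A stand-in for HOG-like
    descriptors, not the feature used in the original work.
    """
    gy, gx = np.gradient(image.astype(float))  # derivatives along rows, columns
    magnitude = np.hypot(gx, gy)
    orientation = np.mod(np.arctan2(gy, gx), np.pi)  # fold into [0, pi)
    hist, _ = np.histogram(orientation, bins=num_bins, range=(0.0, np.pi),
                           weights=magnitude)
    norm = np.linalg.norm(hist)
    return hist / norm if norm > 0 else hist


def train_joint_detector(synthetic_X, synthetic_y, real_X, real_y,
                         lr=0.1, epochs=500):
    """Fit one logistic-regression classifier on synthetic + real features."""
    X = np.vstack([synthetic_X, real_X])
    y = np.concatenate([synthetic_y, real_y]).astype(float)
    X = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias column
    w = np.zeros(X.shape[1])
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-X @ w))  # predicted probability of "person"
        w -= lr * X.T @ (p - y) / len(y)  # gradient step on the log-loss
    return w


def predict(w, X):
    """Return 1 (person) where the linear score is positive, else 0."""
    X = np.hstack([X, np.ones((X.shape[0], 1))])
    return (X @ w > 0).astype(int)
```

In practice, `synthetic_X` would hold features computed from the rendered edge images and `real_X` features from annotated photographs. The two sets are simply concatenated here, although reweighting one source relative to the other is a natural variation.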

References

[1] Learning People Detection Models from Few Training Samples, L. Pishchulin, A. Jain, C. Wojek, M. Andriluka, T. Thormaehlen and B. Schiele, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2011

[2] In Good Shape: Robust People Detection based on Appearance and Shape, L. Pishchulin, A. Jain, C. Wojek, T. Thormaehlen and B. Schiele, British Machine Vision Conference (BMVC), September 2011