Introduction

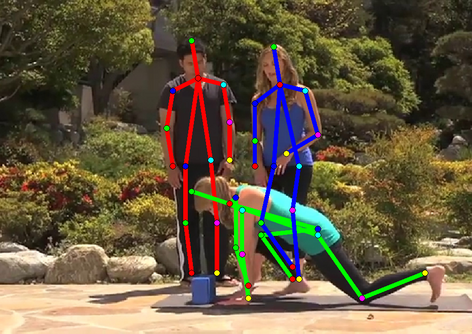

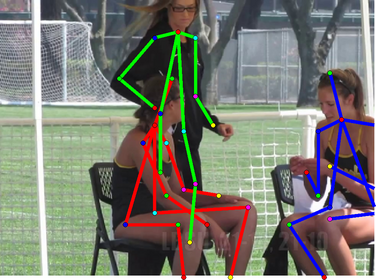

This work considers the task of articulated human pose estimation of multiple people in real-world images. We propose an approach that jointly solves the tasks of detection and pose estimation: it infers the number of persons in a scene, identifies occluded body parts, and disambiguates body parts between people in close proximity to each other. This joint formulation is in contrast to previous strategies that address the problem by first detecting people and subsequently estimating their body pose. We propose a partitioning and labeling formulation of a set of body-part hypotheses generated with CNN-based part detectors. Our formulation, an instance of an integer linear program, implicitly performs non-maximum suppression on the set of part candidates and groups them to form configurations of body parts respecting geometric and appearance constraints. Experiments on four different datasets demonstrate state-of-the-art results for both single-person and multi-person pose estimation.
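To make the idea of joint partitioning and labeling concrete, here is a minimal toy sketch. It assumes a cost structure of per-detection label costs plus pairwise costs that apply only when two detections are assigned to the same person; the names `ALPHA`, `pair_cost`, `CLASSES` and all numbers are hypothetical and chosen for illustration. Exhaustive search stands in for the integer linear program solver, so this only scales to a handful of candidates and is not the DeepCut implementation. Each candidate is either given a part label or left unlabeled (suppressed), and the labeled candidates are partitioned into people.

```python
from itertools import product

# Hypothetical toy instance: four part candidates from an imagined CNN detector.
CLASSES = ["head", "neck"]
DETECTIONS = [0, 1, 2, 3]

# Hypothetical unary costs ALPHA[d][c]; negative means evidence that d is part c.
ALPHA = {
    0: {"head": -2.0, "neck": 1.0},
    1: {"head": -1.5, "neck": 1.0},  # overlapping near-duplicate of candidate 0
    2: {"head": 1.0, "neck": -2.0},
    3: {"head": 0.5, "neck": 0.5},   # weak candidate, likely a false positive
}


def pair_cost(d, c, e, f):
    """Hypothetical cost of putting candidates d and e (labels c, f) in one person."""
    pair = frozenset((d, e))
    if pair == frozenset((0, 1)):
        # Overlapping duplicates: cheap to group only if both are heads.
        return -1.0 if c == f == "head" else 5.0
    if pair in (frozenset((0, 2)), frozenset((1, 2))):
        # A head and a neck at a plausible relative offset.
        return -1.0 if {c, f} == {"head", "neck"} else 5.0
    return 1.0  # any other grouping is mildly implausible


def set_partitions(items):
    """Yield every partition of `items` into non-empty blocks (one block = one person)."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Put `first` into each existing block...
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        # ...or start a new block containing only `first`.
        yield [[first]] + partition


def solve():
    """Exhaustively minimize unary + same-person pairwise costs over labelings and partitions."""
    best_cost, best = float("inf"), None
    # Each candidate gets a part label, or None meaning it is suppressed.
    for labels in product([None] + CLASSES, repeat=len(DETECTIONS)):
        active = [d for d in DETECTIONS if labels[d] is not None]
        unary = sum(ALPHA[d][labels[d]] for d in active)
        for partition in set_partitions(active):
            cost = unary
            for block in partition:
                for i, d in enumerate(block):
                    for e in block[i + 1:]:
                        cost += pair_cost(d, labels[d], e, labels[e])
            if cost < best_cost:
                best_cost, best = cost, (labels, partition)
    return best_cost, best


if __name__ == "__main__":
    print(solve())
```

With these made-up costs the optimum labels candidates 0 and 1 both as `head`, candidate 2 as `neck`, leaves candidate 3 unlabeled, and places 0, 1 and 2 in a single person. The duplicate head candidates end up in the same person with the same label, which is how grouping can act as an implicit form of non-maximum suppression, while the unlabeled candidate is discarded.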

Citing

@inproceedings{pishchulin16cvpr,

author = {Leonid Pishchulin and Eldar Insafutdinov and Siyu Tang and Bjoern Andres and Mykhaylo Andriluka and Peter Gehler and Bernt Schiele},

title = {DeepCut: Joint Subset Partition and Labeling for Multi Person Pose Estimation},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2016},

month = {June}

}

@inproceedings{insafutdinov16ariv,

author = {Eldar Insafutdinov and Leonid Pishchulin and Bjoern Andres and Mykhaylo Andriluka and Bernt Schiele},

title = {DeeperCut: A Deeper, Stronger, and Faster Multi-Person Pose Estimation Model},

booktitle = {European Conference on Computer Vision (ECCV)},

year = {2016},

month = {May}

}