A new approach enables, for the first time, the creation and control of photorealistic digital avatars based on input from a single, body-worn RGB camera.

Jianchun Chen, PhD student at MPI for Informatics and first author of "EgoAvatar", presents the research prototype of the head-worn camera setup. Photo: Philipp Zapf-Schramm/MPI-INF

If digital meetings became indistinguishable from real-life interactions, it could fundamentally change telecommunications. However, this requires accessible, hardware-efficient techniques that allow users to generate realistic virtual avatars. A research team involving the Max Planck Institute for Informatics, the Saarbrücken Research Center for Visual Computing, Interaction and Artificial Intelligence (VIA), and Google has now developed an approach that, for the first time, creates lifelike digital representations from the input of a single, head-mounted camera.

“The biggest technical challenge is combining two aspects: First, creating an avatar that captures and mirrors a real person’s appearance and movement as precisely as possible. Second, achieving this with minimal hardware, from a first-person perspective, using only a single head-mounted camera,” explains Jianchun Chen, first author of the study and a doctoral researcher at the Max Planck Institute for Informatics and Saarland University. Previous approaches addressed only parts of this challenge: they focused solely on head avatars, relied on complex multi-camera setups to create avatars, or failed to achieve a photorealistic representation. “Our approach is the first to combine photorealistic animation with so-called egocentric motion capture in a single method. This is an important step toward immersive VR telepresence,” says Professor Christian Theobalt, Scientific Director at the Max Planck Institute for Informatics and Founding Director of the VIA Center.

The method, named “EgoAvatar,” is still a basic research project. Currently, the approach remains person-specific, meaning that an individual model must first be created for each user in a two-step process. This process takes place at the Motion-Capture Studio at the Max Planck Institute for Informatics, where the system captures detailed movement patterns and the interaction between body, clothing, and lighting conditions. Based on this data, a model is generated that learns how a specific person moves and how their clothing responds to different motions.

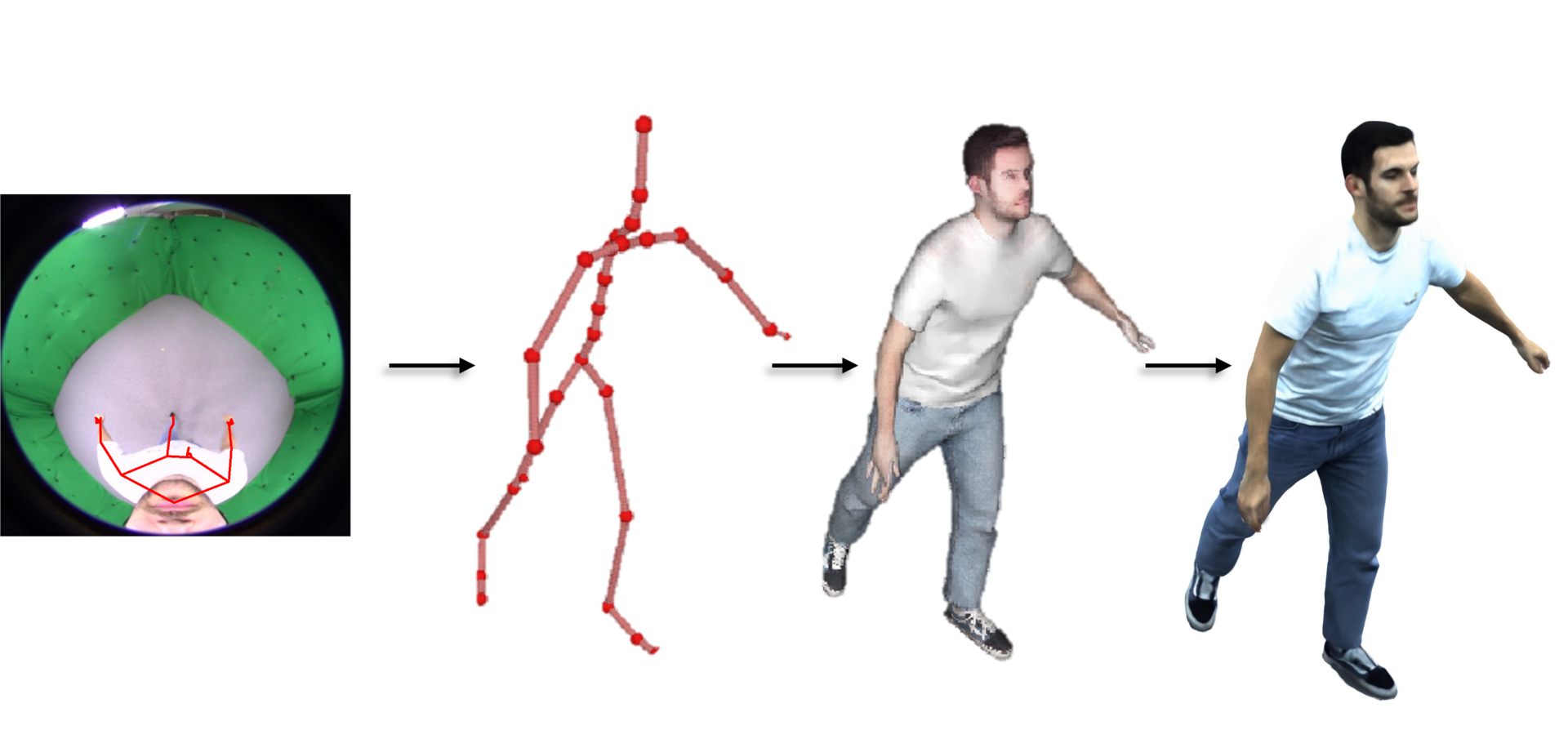

Using this model as a foundation, EgoAvatar can then reconstruct a person’s appearance and movement solely from a single, downward-facing head-mounted camera. The core of the research is a multi-step process: first, body movements are analyzed and converted into a precise, controllable skeletal representation. Next, a 3D reconstruction is generated that realistically captures both body shape and fine details of clothing and surface textures. During this process, the pre-trained model is refined to match the egocentric camera perspective precisely and to produce lifelike movements. Finally, the avatar is rendered photorealistically, with realistic textures and materials, so that it can be viewed from any angle.
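The press release does not describe EgoAvatar's internals at the level of code. As a hedged illustration only, the sketch below shows two textbook building blocks that skeleton-driven avatar pipelines of this general kind commonly use: linear blend skinning (LBS), which poses a template surface from per-joint skeletal transforms, and pinhole projection, which displays the posed surface from an arbitrary virtual viewpoint. The function names, array shapes, and the choice of these two techniques are assumptions for illustration; this is not the paper's method, whose refinement and rendering stages are learned.

```python
import numpy as np

# Hypothetical helpers illustrating generic techniques, not EgoAvatar's code.


def linear_blend_skinning(rest_vertices, weights, rotations, translations):
    """Pose a template mesh with per-joint rigid transforms (standard LBS).

    rest_vertices: (V, 3) template vertices in the rest pose
    weights:       (V, J) skinning weights; each row should sum to 1
    rotations:     (J, 3, 3) per-joint rotation matrices
    translations:  (J, 3) per-joint translations
    """
    # Apply every joint's transform to every vertex -> shape (J, V, 3).
    per_joint = np.einsum("jab,vb->jva", rotations, rest_vertices)
    per_joint = per_joint + translations[:, None, :]
    # Blend the J candidate positions with each vertex's weights -> (V, 3).
    return np.einsum("vj,jva->va", weights, per_joint)


def project_pinhole(points, rotation, translation, focal, center):
    """Project world-space points into a virtual pinhole camera.

    Choosing a different (rotation, translation) views the same posed
    surface from another angle. Assumes all points lie in front of the camera.
    """
    cam = points @ rotation.T + translation  # world -> camera coordinates, (N, 3)
    uv = cam[:, :2] / cam[:, 2:3]            # perspective divide
    return focal * uv + center               # pixel coordinates, (N, 2)


if __name__ == "__main__":
    # Toy example: three vertices on a line, two joints; joint 1 moves +1 along x.
    rest = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
    weights = np.array([[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]])
    rotations = np.stack([np.eye(3), np.eye(3)])
    translations = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])

    posed = linear_blend_skinning(rest, weights, rotations, translations)
    print(posed)  # vertices at x = 0.0, 1.5, 3.0

    pixels = project_pinhole(posed, np.eye(3), np.array([0.0, 0.0, 5.0]),
                             focal=100.0, center=np.array([320.0, 240.0]))
    print(pixels)  # u = 320, 350, 380; v = 240 for all three
```

The middle vertex, weighted equally between the two joints, lands halfway between their transformed positions. This simple averaging is what makes LBS cheap, and also why it can produce artifacts at strongly bent joints, which more expressive deformation models try to avoid.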

The research team evaluated and tested its method using a challenging, self-developed benchmark. In this process, the avatars reconstructed from a single-camera feed were systematically compared with high-precision 3D reference models captured by a 120-camera setup. The results demonstrate that EgoAvatar produces more accurate motion reconstructions and more realistic visual details than previous methods.
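The press release does not say which metrics the benchmark uses. As an assumption, the sketch below shows two standard ways such a comparison against reference captures is often scored: mean per-joint position error (MPJPE) for motion accuracy, and peak signal-to-noise ratio (PSNR) for how closely a rendered image matches a reference view. Both assume the predicted and reference data are already aligned in the same coordinate frame or camera view; the function names are hypothetical.

```python
import numpy as np

# Hypothetical evaluation helpers; the actual EgoAvatar benchmark metrics may differ.


def mpjpe(predicted, reference):
    """Mean per-joint position error over T frames and J joints.

    predicted, reference: arrays of shape (T, J, 3) in the same units and frame.
    """
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if predicted.shape != reference.shape:
        raise ValueError("predicted and reference must have the same shape")
    # Euclidean error per joint per frame, averaged over all frames and joints.
    return float(np.linalg.norm(predicted - reference, axis=-1).mean())


def psnr(rendered, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB for images with values in [0, max_value]."""
    rendered = np.asarray(rendered, dtype=float)
    reference = np.asarray(reference, dtype=float)
    mse = np.mean((rendered - reference) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


if __name__ == "__main__":
    # One frame, two joints: errors of 5 and 0 units -> MPJPE of 2.5.
    pred = [[[3.0, 4.0, 0.0], [0.0, 0.0, 0.0]]]
    ref = [[[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]]
    print(mpjpe(pred, ref))  # 2.5

    # A uniform error of 0.1 gives MSE = 0.01, i.e. about 20 dB.
    print(psnr(np.full((2, 2), 0.1), np.zeros((2, 2))))
```

Lower MPJPE and higher PSNR indicate closer agreement with the reference. PSNR alone does not fully capture perceived realism, so image comparisons of this kind are often reported alongside perceptual metrics.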

The new method combines several interlinked developments from the Max Planck Institute for Informatics into a novel approach. “Our long-term goal is to eliminate the need for the extensive pre-training phase,” says Theobalt. Removing this person-specific step could eventually make photorealistic avatars more accessible for virtual meetings, gaming, or digital actors.

The research team presented its paper, “EgoAvatar: Egocentric View-Driven and Photorealistic Full-body Avatars,” at the 2024 ACM SIGGRAPH Conference and Exhibition on Computer Graphics and Interactive Techniques in Asia (SIGGRAPH ASIA), which took place from December 3 to 6, 2024, in Tokyo, Japan. SIGGRAPH ASIA is one of the world’s leading conferences in computer graphics and visual computing.

EgoAvatar is a collaborative project between the “Visual Computing and Artificial Intelligence” department at the Max Planck Institute for Informatics and the Saarbrücken Research Center for Visual Computing, Interaction and Artificial Intelligence (VIA). The project is part of a strategic research partnership with Google, launched in 2022, which was also involved in this study.

Original Publication:

Chen, J., Wang, J., Zhang, Y., Pandey, R., Beeler, T., Habermann, M., Theobalt, C. (2024): “EgoAvatar: Egocentric View-Driven and Photorealistic Full-body Avatars.” 17th ACM SIGGRAPH Conference and Exhibition on Computer Graphics and Interactive Techniques in Asia (SIGGRAPH ASIA). https://dl.acm.org/doi/10.1145/3680528.3687631

Contact and Editor:

Philipp Zapf-Schramm

Max Planck Institute for Informatics

Phone: +49 681 9325 5409

Email: pzs@mpi-inf.mpg.de