Human Motion from Video and IMUs

Recording human motion is necessary for modelling, understanding and automatically animating full-body human movement. Traditional marker-based optical Motion Capture (MoCap) systems are intrusive and restrict motions to controlled laboratory spaces. As a result, simple daily activities such as biking or having coffee with friends cannot be recorded with such systems. Image-based motion capture methods offer an alternative, but they are not yet accurate enough and require a direct line of sight to the camera.

To address these issues and to record human motion in everyday natural situations, we leverage Inertial Measurement Units (IMUs), which measure local orientation and acceleration. IMUs provide cues about human motion without requiring external cameras, which is desirable for outdoor recordings where occlusions are frequent.
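As a minimal sketch of what "local orientation and acceleration" means in practice, the function below turns one raw IMU reading into a global-frame acceleration. It assumes a z-up global frame and an IMU that reports its orientation as a sensor-to-global rotation matrix; both are assumptions of this illustration, not details of our systems. An accelerometer measures specific force, so a sensor lying still reads +9.81 m/s² along the up axis, and gravity has to be added back after rotating the measurement into the global frame.

```python
import numpy as np

# Gravity in the global frame, assuming a z-up world (an assumption of this sketch).
GRAVITY = np.array([0.0, 0.0, -9.81])


def imu_to_global(R_sensor_to_global: np.ndarray, f_sensor: np.ndarray) -> np.ndarray:
    """Convert one IMU reading into a global-frame linear acceleration.

    R_sensor_to_global: 3x3 rotation reported by the IMU's orientation filter.
    f_sensor: 3-vector measured by the accelerometer in the sensor's own frame.
    The accelerometer senses specific force (acceleration minus gravity), so
    gravity is added back after rotating into the global frame.
    """
    f_global = R_sensor_to_global @ f_sensor
    return f_global + GRAVITY


def rot_z(angle: float) -> np.ndarray:
    """Rotation about the vertical axis, used here to build example orientations."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


if __name__ == "__main__":
    # A sensor turned 90 degrees about z, accelerating along its own x axis
    # at 1 m/s^2 while also sensing gravity on its z axis.
    reading = np.array([1.0, 0.0, 9.81])
    print(imu_to_global(rot_z(np.pi / 2), reading))  # approximately [0, 1, 0]
```

Because the sensor is rotated by 90 degrees, motion along its local x axis shows up along the global y axis once the orientation is applied.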

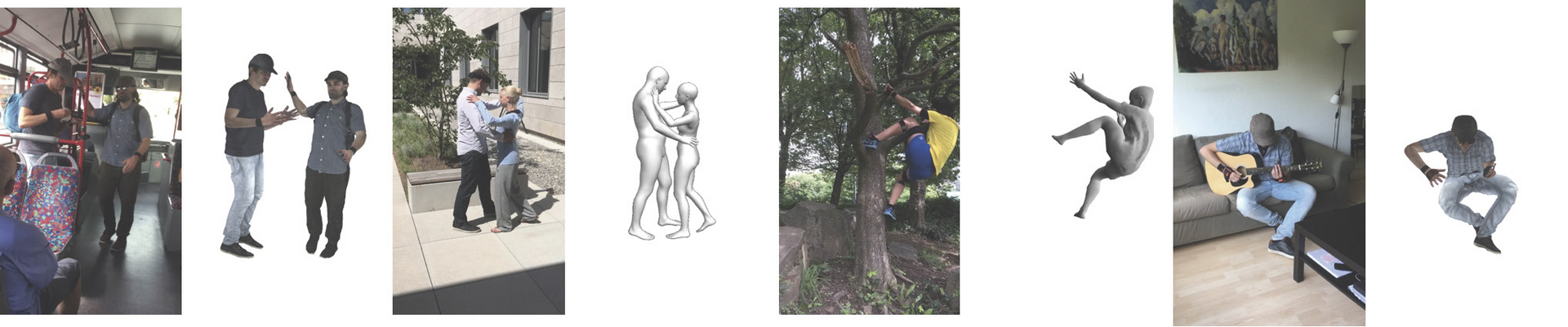

Human Pose from 6 IMUs: Existing IMU systems are intrusive because they require a large number of sensors (17 or more) worn on the body. In previous work (Figure 1-left), we demonstrated a space-time optimization approach, the Sparse Inertial Poser (SIP), which recovers full-body motion from only 6 IMUs attached to the wrists, lower legs, waist and head.
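A space-time objective of this kind compares, over a window of frames, what the body model predicts at the sensor locations with what the IMUs measure. The sketch below shows only two such data terms, an orientation term and an acceleration term, under simplifying assumptions: predicted sensor rotations and positions are given directly as arrays (a real solver would derive them from body-model pose parameters and optimize those), orientations are compared with a squared Frobenius distance, accelerations come from central finite differences, and the function name `sip_like_energy` and the weights `w_ori` and `w_acc` are hypothetical. Priors and the optimizer itself are omitted.

```python
import numpy as np


def finite_diff_acc(positions: np.ndarray, dt: float) -> np.ndarray:
    """Central finite-difference acceleration along the leading (time) axis.

    positions: (T, ...) array; returns (T - 2, ...) with
    a_t = (p_{t-1} - 2 p_t + p_{t+1}) / dt**2 for t = 1 .. T-2.
    """
    return (positions[:-2] - 2.0 * positions[1:-1] + positions[2:]) / dt**2


def sip_like_energy(pred_rot, pred_pos, imu_rot, imu_acc, dt, w_ori=1.0, w_acc=0.1):
    """Hypothetical data terms of a space-time objective for T frames and S sensors.

    pred_rot: (T, S, 3, 3) sensor orientations implied by candidate body poses.
    pred_pos: (T, S, 3) sensor positions implied by candidate body poses.
    imu_rot:  (T, S, 3, 3) measured IMU orientations in the global frame.
    imu_acc:  (T, S, 3) measured global accelerations with gravity removed.
    """
    # Orientation term: squared Frobenius distance between rotation matrices.
    e_ori = np.sum((pred_rot - imu_rot) ** 2)
    # Acceleration term: the first and last frames have no central difference.
    e_acc = np.sum((finite_diff_acc(pred_pos, dt) - imu_acc[1:-1]) ** 2)
    return w_ori * e_ori + w_acc * e_acc


if __name__ == "__main__":
    T, S, dt = 10, 6, 1.0 / 60.0
    t = np.arange(T) * dt
    acc = np.array([0.0, 1.0, 0.0])
    # Every sensor accelerates uniformly: p(t) = 0.5 * a * t^2, shape (T, S, 3).
    pos = 0.5 * (t**2)[:, None, None] * acc * np.ones((1, S, 1))
    rots = np.broadcast_to(np.eye(3), (T, S, 3, 3))
    measured_acc = np.broadcast_to(acc, (T, S, 3))
    # Close to 0, since the trajectory agrees with the measurements.
    print(sip_like_energy(rots, pos, rots, measured_acc, dt))
```

Tying the acceleration term to positions through finite differences is what couples neighbouring frames, so the poses in a window have to be solved jointly rather than frame by frame.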

Real-time Human Pose from 6 IMUs: While less intrusive, SIP is inherently offline, which rules out many applications. In recent work (Figure 1-right), we present a deep-learning-based, real-time algorithm for full-body reconstruction from 6 IMUs alone. We found that propagating information both forward and backward in time is crucial for reconstructing natural human motion, so we use a bidirectional Recurrent Neural Network. We train on synthetic IMU data and generalize to real data with transfer learning.
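To illustrate how a bidirectional network lets each output frame draw on both past and future measurements, here is a minimal NumPy forward pass. It is a sketch, not our trained model: it uses a plain tanh (Elman) cell with random weights, and the input and output sizes (6 IMUs x 12 values per sensor, 24 joints x 3 axis-angle values) as well as the helper names are hypothetical. In a live setting the backward direction can only see a short window of future frames, which adds a small latency.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 6 IMUs x (9 rotation-matrix entries + 3 accelerations) = 72
# inputs, a small hidden state, and 24 joints x 3 axis-angle values = 72 outputs.
N_IN, N_HID, N_OUT = 72, 64, 72


def init_rnn(n_in, n_hid):
    """Random weights for one direction of a simple (Elman) RNN."""
    return {
        "W_x": rng.normal(0.0, 0.1, (n_hid, n_in)),
        "W_h": rng.normal(0.0, 0.1, (n_hid, n_hid)),
        "b": np.zeros(n_hid),
    }


def run_rnn(params, xs):
    """Run the RNN over xs of shape (T, n_in); returns hidden states (T, n_hid)."""
    h = np.zeros(params["W_h"].shape[0])
    hs = []
    for x in xs:
        h = np.tanh(params["W_x"] @ x + params["W_h"] @ h + params["b"])
        hs.append(h)
    return np.stack(hs)


def birnn_poses(fwd, bwd, W_out, xs):
    """Bidirectional pass: the forward RNN summarizes past frames, the backward
    RNN summarizes future frames, and each output frame sees both states."""
    h_fwd = run_rnn(fwd, xs)
    h_bwd = run_rnn(bwd, xs[::-1])[::-1]  # reverse, run, then re-align in time
    return np.concatenate([h_fwd, h_bwd], axis=1) @ W_out.T


fwd, bwd = init_rnn(N_IN, N_HID), init_rnn(N_IN, N_HID)
W_out = rng.normal(0.0, 0.1, (N_OUT, 2 * N_HID))
imu_window = rng.normal(size=(20, N_IN))  # 20 frames of random stand-in IMU features
poses = birnn_poses(fwd, bwd, W_out, imu_window)
print(poses.shape)  # (20, 72)
```

Running the backward RNN on the time-reversed sequence and reversing its outputs again aligns both hidden states frame by frame, so every pose estimate is conditioned on the whole window rather than on the past alone.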

Visual-Inertial Human Pose: Unlike visual measurements, IMUs cannot provide absolute joint positions, which makes purely IMU-based methods inaccurate for certain types of motion. Hence, in recent work we introduce VIP, which combines IMUs with a single moving camera to robustly recover human pose in challenging outdoor scenes. The moving camera, sensor heading drift, cluttered backgrounds, occlusions and the many people visible in the video make the problem very hard. We associate the 2D pose detections in each image with the corresponding IMU-equipped persons by solving a novel graph-based optimization problem that enforces 3D-to-2D coherency within a frame and across frames that are far apart in time. Given these associations, we jointly optimize the pose of the SMPL body model, the camera pose and the heading drift using continuous optimization.
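The association step can be illustrated with a deliberately simplified stand-in. Rather than the graph-based optimization described above, which enforces coherency across long ranges of frames, the sketch below matches IMU-equipped persons to 2D detections within a single frame: it projects IMU-derived 3D joints with a pinhole camera and picks the assignment with the lowest total mean keypoint distance. It assumes the focal length `f`, principal point `c` and the 3D joints in the camera frame are known (i.e. camera pose and heading drift are already resolved), the function names are hypothetical, and assignments are enumerated by brute force, which is only practical for a handful of people.

```python
import itertools

import numpy as np


def project(points_cam: np.ndarray, f: float, c: np.ndarray) -> np.ndarray:
    """Pinhole projection of (J, 3) camera-frame points to (J, 2) pixels."""
    return f * points_cam[:, :2] / points_cam[:, 2:3] + c


def associate(imu_joints_cam, detections_2d, f, c):
    """Assign each IMU-equipped person to one 2D detection in a single frame.

    imu_joints_cam: list of (J, 3) joint positions per IMU person, camera frame.
    detections_2d:  list of (J, 2) keypoints per detected person in the image.
    Returns a tuple whose entry i is the detection index for IMU person i.
    Assumes there are at least as many detections as IMU persons.
    """
    projected = [project(p, f, c) for p in imu_joints_cam]
    # cost[i, k]: mean pixel distance between person i's projection and detection k.
    cost = np.array([[np.linalg.norm(proj - det, axis=1).mean()
                      for det in detections_2d] for proj in projected])
    n_imu, n_det = cost.shape
    # Brute force: try every injective mapping of IMU persons to detections.
    return min(itertools.permutations(range(n_det), n_imu),
               key=lambda perm: sum(cost[i, k] for i, k in enumerate(perm)))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    f, c = 500.0, np.array([320.0, 240.0])
    # Two IMU-equipped people 5 m in front of the camera, plus one bystander.
    person_a = rng.normal(0.0, 0.3, (15, 3)) + np.array([-1.0, 0.0, 5.0])
    person_b = rng.normal(0.0, 0.3, (15, 3)) + np.array([1.0, 0.0, 5.0])
    bystander = rng.normal(0.0, 0.3, (15, 3)) + np.array([0.0, 0.0, 8.0])
    detections = [project(p, f, c) for p in (person_b, bystander, person_a)]
    print(associate([person_a, person_b], detections, f, c))  # (2, 0)
```

A per-frame matcher like this has no memory, so a single frame with heavy occlusion can flip an assignment; reasoning across many frames at once, as the graph formulation does, is what makes the association robust.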

Using VIP, we collected the 3D Poses in the Wild (3DPW) dataset, which contains videos of humans in challenging scenes together with accurate 3D pose annotations (see Figure 2). For the first time, it provides the means to quantitatively evaluate monocular methods in difficult scenes, and it aims to stimulate new research in this area.
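Quantitative evaluation against reference 3D poses usually reports joint position errors. As one hedged example, assuming predicted and reference joints are available as arrays in metres, the mean per-joint position error (MPJPE) after aligning the root joint can be computed as follows. The choice of metric and the root index are assumptions of this sketch; published evaluation protocols may prescribe further alignment, such as a Procrustes fit.

```python
import numpy as np


def mpjpe(pred: np.ndarray, gt: np.ndarray, root: int = 0) -> float:
    """Mean per-joint position error after aligning the root joints.

    pred, gt: (T, J, 3) joint positions in metres for T frames and J joints.
    Subtracting the root joint removes global translation, which a monocular
    method typically cannot recover in metric scale.
    """
    pred_rel = pred - pred[:, root:root + 1]
    gt_rel = gt - gt[:, root:root + 1]
    return float(np.linalg.norm(pred_rel - gt_rel, axis=-1).mean())


if __name__ == "__main__":
    gt = np.random.default_rng(2).normal(size=(5, 4, 3))
    pred = gt.copy()
    pred[:, 3, 0] += 0.1  # one of four joints is off by 10 cm in every frame
    print(mpjpe(pred, gt))  # about 0.025, i.e. 2.5 cm
```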

Gerard Pons-Moll

DEPT. Computer Vision and Machine Learning

Phone +49.681.9325-2135

Email: gpons@mpi-inf.mpg.de