Linear Algebra Primitives for Geometry Processing: Really Affordable High Performance

Geometric data lies at the heart of key applications, spanning from artistic design to scientific computing, from nanoscience to geoengineering. The ability to process and perform numerical computations efficiently on such data is key to enhanced performance which would benefit the workflow across all these fields. High performance has been, for a long time, limited to the use super computers, but as the computing landscape is drastically changing towards ubiquitous parallelism, cheaper multiple core processors and powerful graphics hardware encompassing thousands of core (GPUs) have become accessible to the large public. Unfortunately, the computational power delivered by these powerful units remains largely underexploited, especially, when dealing with geometric data. Performance gains are often limited to embarrassingly parallel task or require dedicated platform-specific engineering efforts for each single algorithm. Our research aims to streamline geometry processing operations from the ground up in an intuitive and platform-independent manner.

Arguably the most common geometric representations are meshes. Their ability to capture arbitrary free form surfaces has long made them a versatile cross-disciplinary representation. Direct access to connectivity information and traversal has long required extensive linked list data structures known by variants such as winged-edge or half-edge. On modern high performance computing hardware, the conventional wisdom behind these intermediate structures is challenged by costly memory access, and more importantly by prohibitive memory resources on environments such as graphics hardware. There is therefore a pressing need for rethinking the mesh interfacing problem radically.

In our view, the key to supplying a simple interface lies in choosing the right abstraction level. Our aim is to provide a representation that is familiar to a majority of practitioners and new comers and to allow them to manipulate it in an intuitive way. Our algorithmic formulation is centered around objects like matrices, vectors and permutations, and acts by means of operations like sparse matrix-vector multiplication, sparse matrix-matrix multiplication, and maps.

This linear algebra flavored representation serves several purposes:

- Compactness and readability. Algorithms are concise and easy to interpret. Dispensing with intermediate data structures reduces code bloat and broadens accessibility.

- Reusability. The same machinery used for numerical optimization can be used for mesh processing.

- Performance. Data access patterns can deeply weigh on cache and memory related performance. Array-based algorithms bring forward data access patterns and can be readily optimized.

On the practical level, our sparse matrix representation for arbitrary unstructured grids, aka mesh matrix, not only reduces the memory storage requirements but also cuts down on the bulk of data movement from global storage to the compute units. With this representation in mind, high performance gets channeled through linear algebra kernels. The compactness and effectiveness of this representation makes allows encoding complex operation in simple, humanly readable, and highly performing code. A typical example is demonstrated in Figure 1.

Our strategy for deployment throughout applications focuses on multiple aspects:

Direct Adaption: Many existing algorithms are simple enough that they can be almost literally translated into the language of linear algebra. In this respect, the performance gains are instantaneous and the learning curve is almost flat.

Algorithmic reinterpretation: More elaborate algorithms which involve substantial connectivity changes cannot be directly translated. In order to unleash computing performance gains, a reinterpretation of existing algorithms in the light of the linear algebra formalism is necessary.

Typical examples from our research include subdivision surfaces which are widely used in modeling and have become an indispensable production tool in the feature film industry. Our approach largely outperforms OpenSubdiv, largely considered as state-of-the-art across industry and academia alike.

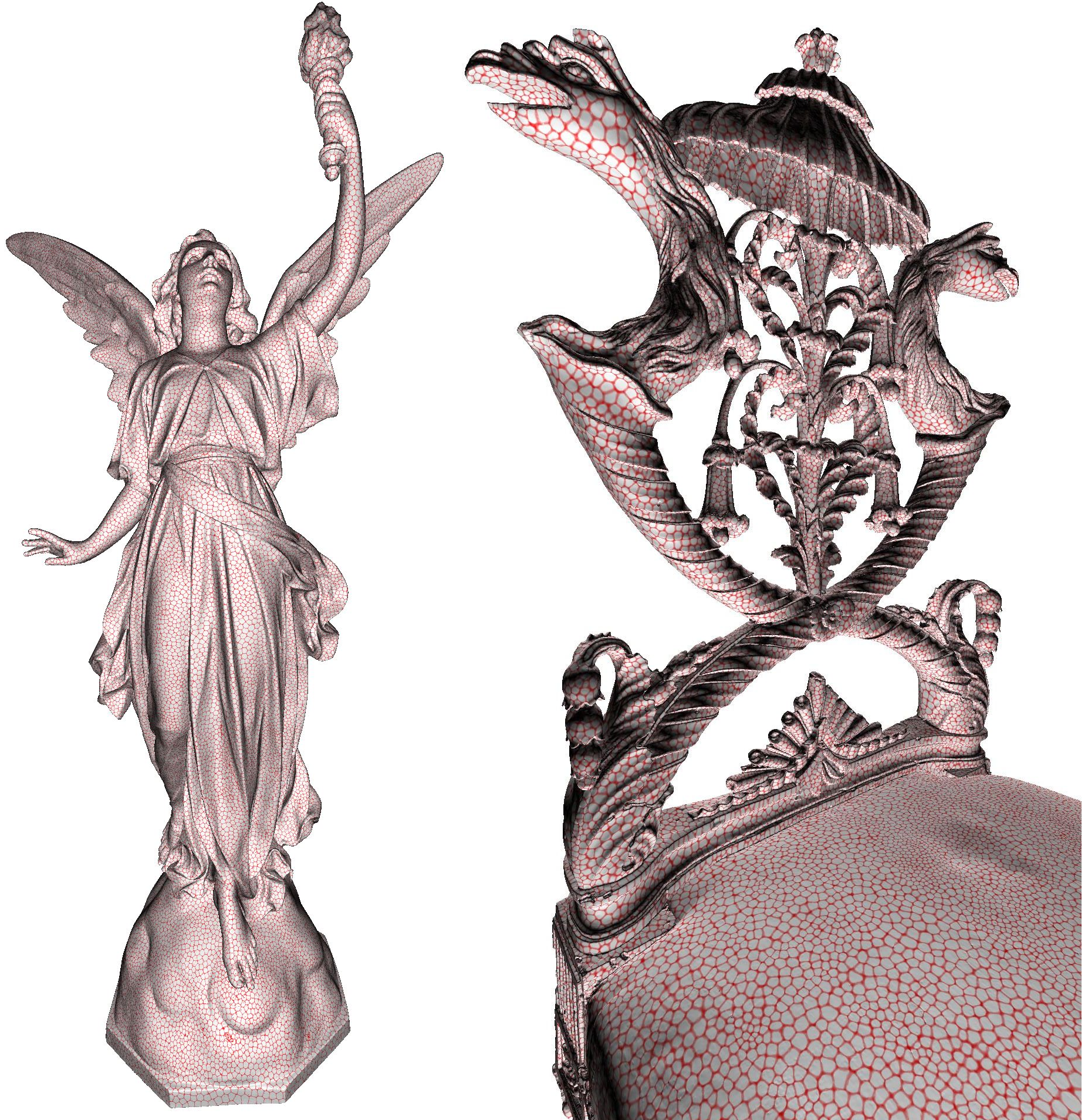

Novel Abstraction: Many existing algorithmic solutions find their roots in early days of computing where the hardware of the day dictated the nature of successful algorithms. As a result, the serial nature of some original algorithmic abstraction is rarely put into question. Efforts to gain performance in these settings generally encounter little success. In this case, a re-abstraction of the problem itself is necessary. A typical example is the generation of Voronoi diagrams (VD) on surfaces and their variants such as centroidal Voronoi tessellations (CVT) as obtained using Lloyd's algorithm. Our solution re-abstracts the problems as a natural partition which emerges as the solution of a time dependent set of partial differential equations describing concurrently evolving fronts on the surface as illustrated in Figure 2. In this way large complex geometric models can be processed efficiently on graphics hardware yielding unprecedented results as shown in Figure 3. An additional example is the matrix assembly in the finite element analysis (FEA). This operation is fundamental for collecting elementary contributions within the global system matrix. Our re-abstraction of the problem allows casting the assembly problem as a sparse matrix-matrix multiplication. In this way, we directly tap into the powerful machinery of linear algebra and achieve considerable performance gains.

Figure 2: Illustration of the growth of natural tessellations (an alternative numerical model to Voronoi diagrams on surfaces) on the Earhart's Flight Suit (10M facets mesh, courtesy of the Smithsonian Institution) initialized with 10 000 random seeds, their corresponding cells are visualized in different colors.