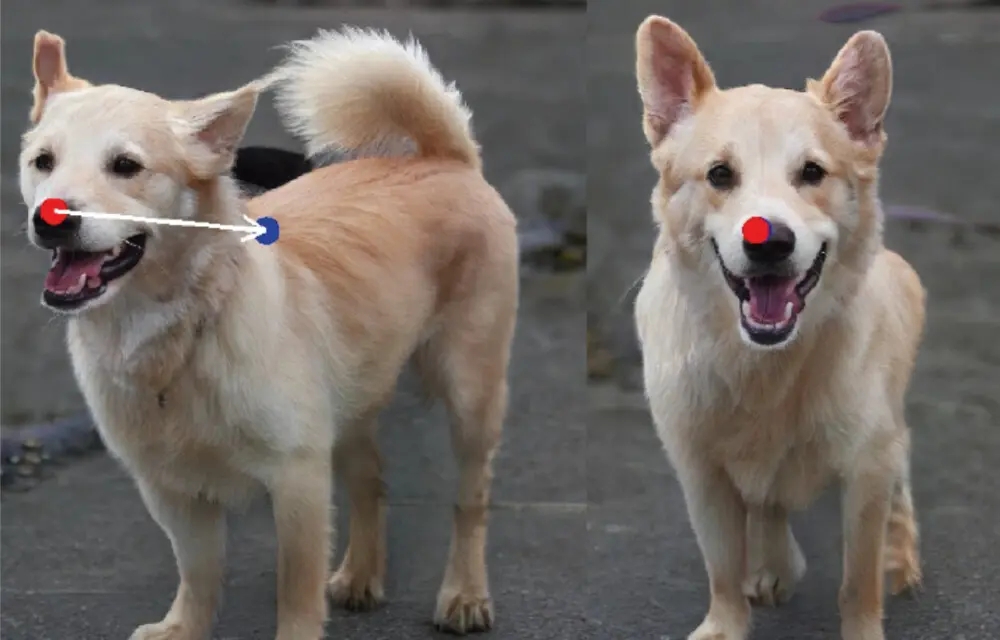

With the "DragGAN" tool, the direction of the dog's gaze in the photo can be adjusted retroactively.

Imagine being able to try different clothes on a virtual avatar and see how they look from every angle. Or adjusting the direction your pet is looking in your favorite photo. You could even change the perspective of a landscape picture. These types of photo edits have always been challenging, even for experts. A novel AI tool now promises that anyone can achieve edits like these with just a few mouse clicks. The method is being developed by a research team led by the Max Planck Institute for Informatics in Saarbrücken, in particular by the Saarbrücken Research Center for Visual Computing, Interaction, and Artificial Intelligence (VIA) located there.

This groundbreaking method has the potential to revolutionize digital image processing. “With ‘DragGAN,’ we are currently creating a user-friendly tool that allows even non-professionals to perform complex image editing. All you need to do is mark the areas in the photo that you want to change and specify the desired edits in a menu. Thanks to the support of AI, with just a few clicks of the mouse anyone can adjust things like the pose, facial expression, direction of gaze, or viewing angle, for example in a pet photo,” explains Christian Theobalt, Managing Director of the Max Planck Institute for Informatics, Director of the Saarbrücken Research Center for Visual Computing, Interaction, and Artificial Intelligence, and Professor at Saarland University on the Saarland Informatics Campus.

This is made possible by artificial intelligence, specifically a type of model called “Generative Adversarial Networks,” or GANs. “As the name suggests, GANs are capable of generating new content, such as images. The term ‘adversarial’ refers to the fact that GANs involve two networks competing against each other,” explains Xingang Pan, a postdoctoral researcher at the MPI for Informatics and the first author of the paper. A GAN consists of a generator, responsible for creating images, and a discriminator, whose task is to determine whether an image is real or was produced by the generator. The two networks are trained in competition with each other until the generator produces images that the discriminator cannot distinguish from real ones.
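The competition between generator and discriminator can be made concrete with a small numerical sketch. The following is a minimal illustration, assuming the standard binary cross-entropy formulation of the GAN objective; the source does not state which loss the GAN underlying DragGAN uses, and the function names here are hypothetical. The discriminator outputs a probability that an image is real, and each network is scored on how well it plays its side of the game.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for a single probability and a 0/1 target."""
    eps = 1e-12  # clamp to avoid log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake):
    """Low when real images score near 1 and generated images near 0.

    d_real / d_fake are lists of discriminator outputs (probabilities
    of "real") on real and generated images, respectively.
    """
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Low when the discriminator scores generated images near 1.

    This is the commonly used non-saturating form (an assumption here).
    """
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# A discriminator that separates real from generated images well.
confident = discriminator_loss([0.9, 0.95], [0.1, 0.05])

# A discriminator that can no longer tell the difference: 0.5 everywhere.
fooled = discriminator_loss([0.5, 0.5], [0.5, 0.5])

print(f"discriminator loss (confident): {confident:.3f}")
print(f"discriminator loss (fooled):    {fooled:.3f}")
print(f"generator loss when D says 0.1: {generator_loss([0.1]):.3f}")
print(f"generator loss when D says 0.9: {generator_loss([0.9]):.3f}")
```

In this sketch, the point at which the discriminator outputs 0.5 for every image corresponds to the end state described above: it can no longer distinguish generated images from real ones.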

There are many uses for GANs. Besides the obvious use case of image generation, GANs are good at predicting images: this allows for so-called video frame prediction, which can reduce the data requirements for video streaming by anticipating the next frame of a video. They can also upscale low-resolution images, improving image quality by computing where the additional pixels of the new image should go.

“In our case, this property of GANs proves advantageous when, for example, the direction of a dog’s gaze is to be changed in an image. The GAN then basically recalculates the whole image, anticipating where each pixel must land in the image with a new viewing direction. A side effect of this is that DragGAN can calculate things that were previously occluded by the dog’s head position, for example. Or if the user wants to show the dog’s teeth, they can open the dog’s muzzle in the image,” explains Xingang Pan. DragGAN could also find applications in professional settings. For instance, fashion designers could use it to adjust the cut of clothing in photographs after the initial capture. Additionally, vehicle manufacturers could efficiently explore different design configurations for their planned vehicles. While DragGAN works on diverse object categories like animals, cars, people and landscapes, most results so far have been achieved on GAN-generated synthetic images. “How to apply it to any user-input images is still a challenging problem that we are looking into,” adds Xingang Pan.

Just a few days after the release of the preprint, the new tool from the Saarbrücken-based computer scientists is already causing a stir in the international tech community and is considered by many the next big step in AI-assisted image processing. While tools like Midjourney can be used to create completely new images, DragGAN could massively simplify their post-processing.

The new method is being developed at the Max Planck Institute for Informatics in collaboration with the “Saarbrücken Research Center for Visual Computing, Interaction, and Artificial Intelligence (VIA)”, which was opened there in partnership with Google. The research consortium also includes experts from the Massachusetts Institute of Technology (MIT) and the University of Pennsylvania.

In addition to Professor Christian Theobalt and Xingang Pan, contributors to the paper titled “Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold” were: Thomas Leimkuehler (MPI INF), Lingjie Liu (MPI INF and University of Pennsylvania), Abhimitra Meka (Google), and Ayush Tewari (MIT CSAIL). The paper has been accepted by the ACM SIGGRAPH conference, the world’s largest professional conference on computer graphics and interactive technologies, to be held in Los Angeles, August 6-10, 2023.

Various image edits performed using the DragGAN method.

Further information:

Original publication (preprint):

Xingang Pan, Ayush Tewari, Thomas Leimkuehler, Lingjie Liu, Abhimitra Meka, and Christian Theobalt. 2023. Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold. In Special Interest Group on Computer Graphics and Interactive Techniques Conference Proceedings (SIGGRAPH ’23 Conference Proceedings), August 6-10, 2023, Los Angeles, CA, USA. ACM, New York, NY, USA, 11 pages. https://doi.org/10.1145/3588432.3591500 https://arxiv.org/pdf/2305.10973.pdf

Project website including a video demo: https://vcai.mpi-inf.mpg.de/projects/DragGAN/

Questions can be directed at:

Prof. Dr. Christian Theobalt

Max Planck Institute for Informatics

Tel.: +49 681 9325 4500

Email: theobalt@mpi-inf.mpg.de

Background Max Planck Institute for Informatics:

The Max Planck Institute for Informatics in Saarbrücken is one of the world’s leading research institutes in computer science. Since its establishment in 1990, the institute has researched the mathematical foundations of information technology in the areas of algorithms and complexity, as well as the logic of programming. At the same time, researchers at the institute have developed new algorithms for various application areas such as databases and information systems, program verification, and bioinformatics. Basic research in visual computing, i.e. computer graphics and computer vision, at the intersection with artificial intelligence and machine learning, is also an important focus of the institute. With publications at the highest level and the education of excellent young researchers, the MPI for Informatics plays a major part in advancing basic research in computer science.

Background “Saarbrücken Research Center for Visual Computing, Interaction and Artificial Intelligence” (VIA):

The “Saarbrücken Research Center for Visual Computing, Interaction and Artificial Intelligence (VIA)” is a strategic research partnership between the MPI for Informatics and Google and conducts basic research in cutting-edge areas of computer graphics, computer vision and human-machine interaction at the interface of artificial intelligence and machine learning. The center works closely with Saarland University and the numerous, internationally renowned computer science research institutions at the Saarland Informatics Campus.

Background Saarland Informatics Campus:

900 scientists (including 400 PhD students) and about 2500 students from more than 80 nations make the Saarland Informatics Campus (SIC) one of the leading locations for computer science in Germany and Europe. Four world-renowned research institutes, namely the German Research Center for Artificial Intelligence (DFKI), the Max Planck Institute for Informatics, the Max Planck Institute for Software Systems, and the Center for Bioinformatics, as well as Saarland University with three connected departments and 24 degree programs, cover the entire spectrum of computer science.

Editor:

Philipp Zapf-Schramm

Saarland Informatics Campus

Tel.: +49 681 302-70741

Email: pzapf@cs.uni-saarland.de